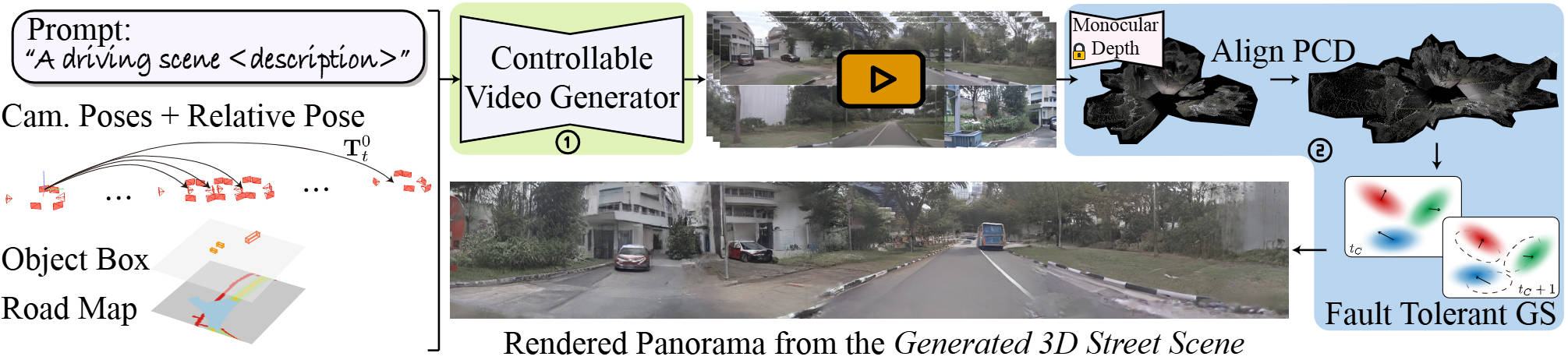

Controllable generative models for images and videos have seen significant success, yet 3D scene generation, especially in unbounded scenarios like autonomous driving, remains underdeveloped. Existing methods lack flexible controllability and often rely on dense view data collection in controlled environments, limiting their generalizability across common datasets (e.g., nuScenes). In this paper, we introduce MagicDrive3D, a novel framework for controllable 3D street scene generation that combines video-based view synthesis with 3D representation (3DGS) generation. It supports multi-condition control, including road maps, 3D objects, and text descriptions. Unlike previous approaches that require 3D representation before training, MagicDrive3D first trains a multi-view video generation model to synthesize diverse street views. This method utilizes routinely collected autonomous driving data, reducing data acquisition challenges and enriching 3D scene generation. In the 3DGS generation step, we introduce Fault-Tolerant Gaussian Splatting to address minor errors and use monocular depth for better initialization, alongside appearance modeling to manage exposure discrepancies across viewpoints. Experiments show that MagicDrive3D generates diverse, high-quality 3D driving scenes, supports any-view rendering, and enhances downstream tasks like BEV segmentation, demonstrating its potential for autonomous driving simulation and beyond.

left: bbox condition (one of our inputs). right: 3D scene generated by MagicDrive3D.

All 3D scenes are fully generated by MagicDrive3D without any input camera views (NOT reconstruction).


For controllable 3D street scene generation, MagicDrive3D decomposes the task into two steps: ① conditional multi-view video generation, which handles the control signals and generates consistent view priors for the novel scene; and ② Gaussian Splatting generation with our Enhanced GS pipeline, which supports rendering from various viewpoints (e.g., panorama).

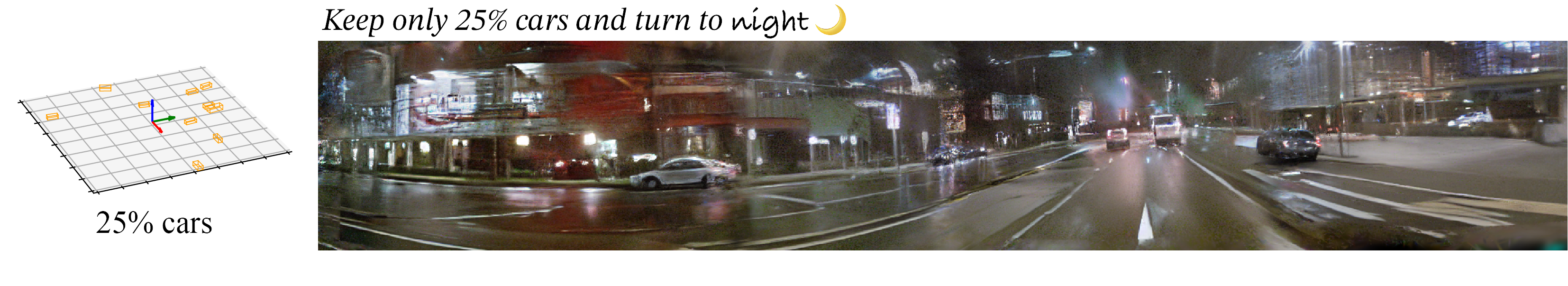
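The abstract mentions using monocular depth for better initialization of the 3DGS step. As a rough illustration only, one common way to turn a depth map into initial 3D points is pinhole unprojection. The sketch below assumes a simple pinhole camera; the function name `unproject_depth` and the intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical, and whether MagicDrive3D initializes its Gaussians exactly this way is an assumption, not something stated on this page.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def unproject_depth(
    depth: Sequence[Sequence[float]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Point]:
    """Lift a depth map to camera-frame 3D points with a pinhole model.

    Hypothetical helper for illustration: depth[v][u] is the depth along
    the optical axis at pixel column u and row v. Pixels with
    non-positive depth are treated as invalid and skipped.
    """
    points: List[Point] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # no valid depth estimate at this pixel
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Toy 2x2 depth map; one pixel has no valid depth.
depth = [[2.0, 2.0], [0.0, 4.0]]
points = unproject_depth(depth, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
```

Points obtained this way could serve as candidate initial Gaussian centers once transformed into a shared world frame with each camera's pose; that transform is omitted here.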

MagicDrive3D offers precise control over objects and some road semantics. Text control is also supported!

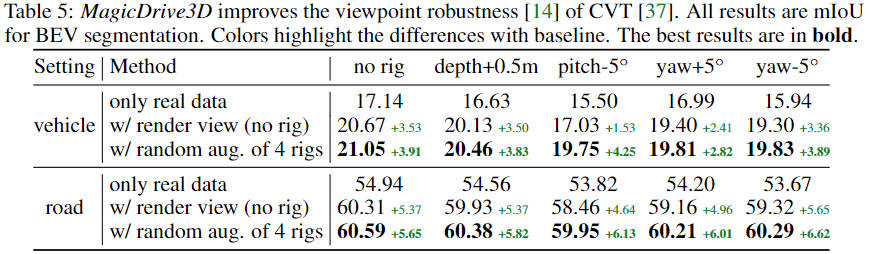

Its controllable street scene generation ability makes MagicDrive3D a powerful data engine. We show how generated scenes can help improve the viewpoint robustness of CVT.

The video generation proposed by MagicDrive3D makes static scene generation possible (left: although the data is collected with scene dynamics, we can generate static scene videos), which facilitates 3DGS scene generation (right: we propose an improved FTGS pipeline that performs much better than the original 3DGS). It is like creating novel bullet-time scenes from a driving dataset.


left/above: multi-view video for a static scene, generated by the 1st step of MagicDrive3D.

right/below: final 3D scene generated by MagicDrive3D (click the button to switch between the Bbox and 3DGS ablation views).